
Submitted: February 18, 2025 | Approved: March 05, 2025 | Published: March 06, 2025

How to cite this article: Trivedi GJ. Survey of Advanced Image Fusion Techniques for Enhanced Visualization in Cardiovascular Diagnosis and Treatment. J Clin Med Exp Images. 2025; 9(1): 001-009. Available from:

https://dx.doi.org/10.29328/journal.jcmei.1001034.

DOI: 10.29328/journal.jcmei.1001034

Copyright License: © 2025 Trivedi GJ. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Keywords: Cardiovascular imaging; Image fusion; Wavelet transform; Principal component analysis; Deep learning; Diagnostic imaging; Medical image processing

Survey of Advanced Image Fusion Techniques for Enhanced Visualization in Cardiovascular Diagnosis and Treatment

Gargi J Trivedi*

Department of Applied Mathematics, Faculty of Engineering and Technology, The Maharaja Sayajirao University of Baroda, Vadodara, India

*Address for Correspondence: Gargi J Trivedi, Department of Applied Mathematics, Faculty of Engineering and Technology, The Maharaja Sayajirao University of Baroda, Vadodara, India, Email: [email protected]; [email protected]

Cardiovascular Diseases (CVDs) remain a major global health concern, necessitating accurate and comprehensive diagnostic techniques. Traditional medical imaging modalities, such as CT angiography, PET, MRI, and ultrasound, provide crucial but limited information when used independently. Image fusion techniques integrate complementary modalities, enhance visualization, and improve diagnostic accuracy. This paper presents a theoretical study of advanced image fusion methods applied to cardiovascular imaging. We explore wavelet-based, Principal Component Analysis (PCA), and deep learning-driven fusion models, emphasizing their theoretical underpinnings, mathematical formulation, and potential clinical applications. The proposed framework enables improved coronary artery visualization, cardiac function assessment, and real-time hemodynamic analysis, offering a non-invasive and highly effective approach to cardiovascular diagnostics.

MSC Codes: 68U10, 94A08, 92C55, 65T60, 62H25, 68T07.

Cardiovascular Diseases (CVDs) are the leading cause of mortality worldwide, accounting for nearly 32% of global deaths. Early and accurate diagnosis is critical for effective intervention, where medical imaging plays a pivotal role by providing non-invasive visualization of cardiac structures, coronary arteries, and vascular abnormalities [1]. However, no single imaging modality is sufficient to capture all essential diagnostic details due to inherent limitations:

Computed Tomography Angiography (CTA) offers high-resolution coronary imaging but lacks functional information [2]. Positron Emission Tomography (PET) provides metabolic and perfusion data but has poor spatial resolution [3]. Magnetic Resonance Imaging (MRI) offers excellent soft tissue contrast but is suboptimal for real-time visualization [4]. Ultrasound (US) enables real-time imaging of blood flow but has limited penetration and resolution [5].

To address these challenges, image fusion techniques integrate complementary imaging modalities to produce enhanced diagnostic images that combine structural, functional, and dynamic information. Fused images improve visualization of coronary arteries, quantitative cardiac function analysis, and hemodynamic flow assessment, aiding in accurate diagnosis and treatment planning. A basic representation of image fusion is shown in Figure 1.

Figure 1: Image fusion of two images from different modalities.

This paper provides a comprehensive analysis of advanced image fusion techniques applied to cardiovascular imaging. Unlike previous studies, which focus on specific fusion algorithms, this work (1) systematically compares classical and modern deep learning-driven fusion methods, (2) integrates mathematical foundations with practical applications, and (3) highlights emerging trends and challenges in multimodal cardiovascular imaging. We examine fusion techniques such as wavelet-based methods, Principal Component Analysis (PCA), and deep learning frameworks, evaluating their advantages, limitations, and clinical implications. By bridging the gap between theoretical development and practical implementation, this study serves as a valuable resource for researchers and clinicians aiming to enhance cardiovascular disease diagnosis through image fusion.

Medical image fusion has gained significant attention in recent years, particularly in cardiovascular diagnostics, where integrating structural and functional imaging provides crucial insights for clinical decision-making. This section discusses key advancements in image fusion methods, their applications in cardiovascular imaging, and recent computational approaches that enhance multi-modal fusion techniques.

Image fusion in medical imaging

Image fusion involves combining data from multiple imaging modalities to enhance visualization and improve diagnostic accuracy. Different fusion techniques have been developed over time, categorized based on the level at which integration occurs. In pixel-level fusion [6], raw pixel values from different modalities are directly merged using techniques such as intensity averaging or maximum selection. Feature-level fusion, on the other hand, extracts important image features and combines them using mathematical transformations [7], which helps retain significant details. Decision-level fusion integrates outputs from multiple classifiers or expert interpretations, ensuring more reliable diagnostic outcomes [8,9].
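As a minimal illustration of the pixel-level rules mentioned above (intensity averaging and maximum selection), the following NumPy sketch may help. It assumes the two inputs are already registered to the same pixel grid and share a comparable intensity scale; the function names `fuse_average` and `fuse_maximum` are illustrative and not taken from any cited work.

```python
import numpy as np


def fuse_average(img1, img2):
    """Pixel-level fusion by intensity averaging (assumes registered inputs)."""
    return (img1.astype(np.float64) + img2.astype(np.float64)) / 2.0


def fuse_maximum(img1, img2):
    """Pixel-level fusion by keeping the larger intensity at each pixel."""
    return np.maximum(img1, img2).astype(np.float64)


# Toy 2x2 "images" standing in for two registered modalities.
a = np.array([[0.0, 1.0], [0.5, 0.2]])
b = np.array([[1.0, 0.0], [0.5, 0.8]])
avg = fuse_average(a, b)
mx = fuse_maximum(a, b)
```

Averaging tends to lower contrast where the modalities disagree, while maximum selection keeps the brighter structure but can also keep bright noise, which is one reason the multi-resolution methods discussed next were introduced.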

Earlier methods like simple averaging and intensity-based fusion often led to information loss. To address these limitations, multi-resolution analysis techniques such as wavelet transforms were introduced. These approaches enabled fusion at different frequency scales, significantly improving contrast and preserving critical features. Wavelet-based fusion, by decomposing images into multiple frequency bands before reconstruction, proved to be more effective than basic intensity-based methods [10-13]. Further advancements, particularly with Non-Subsampled Contourlet Transform (NSCT), have been instrumental in vascular imaging applications, as they enhance directional information [14,15].

More recently, deep learning-based fusion techniques have gained prominence. Convolutional Neural Networks (CNNs) have been used to extract and merge relevant features, surpassing traditional methods in terms of accuracy. By automatically learning optimal fusion representations, CNN-based approaches reduce dependency on predefined mathematical models and improve overall fusion quality [1,16,17].

Multi-modal imaging in cardiovascular diagnosis

Cardiovascular imaging benefits significantly from multi-modal fusion, as different imaging techniques provide complementary diagnostic information [18]. For instance, Computed Tomography Angiography (CTA) offers detailed anatomical visualization of coronary arteries, while Positron Emission Tomography (PET) highlights areas of myocardial ischemia [19]. When these modalities are fused, they generate a more comprehensive diagnostic image, improving the accuracy of coronary artery disease assessment. The fusion process can be expressed mathematically as shown in Eq. (1):

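$$I_{\text{fused}}(x, y) = \alpha\, I_{\text{CT}}(x, y) + (1 - \alpha)\, I_{\text{PET}}(x, y) \tag{1}$$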

where α is a weighting factor that optimizes the balance between anatomical and functional information. Similarly, MRI provides superior soft tissue contrast and allows for ventricular function analysis, whereas Doppler ultrasound offers real-time visualization of blood flow dynamics. Fusing these two modalities enhances hemodynamic assessments, making it particularly useful for diagnosing heart valve disorders and cardiomyopathies [20].

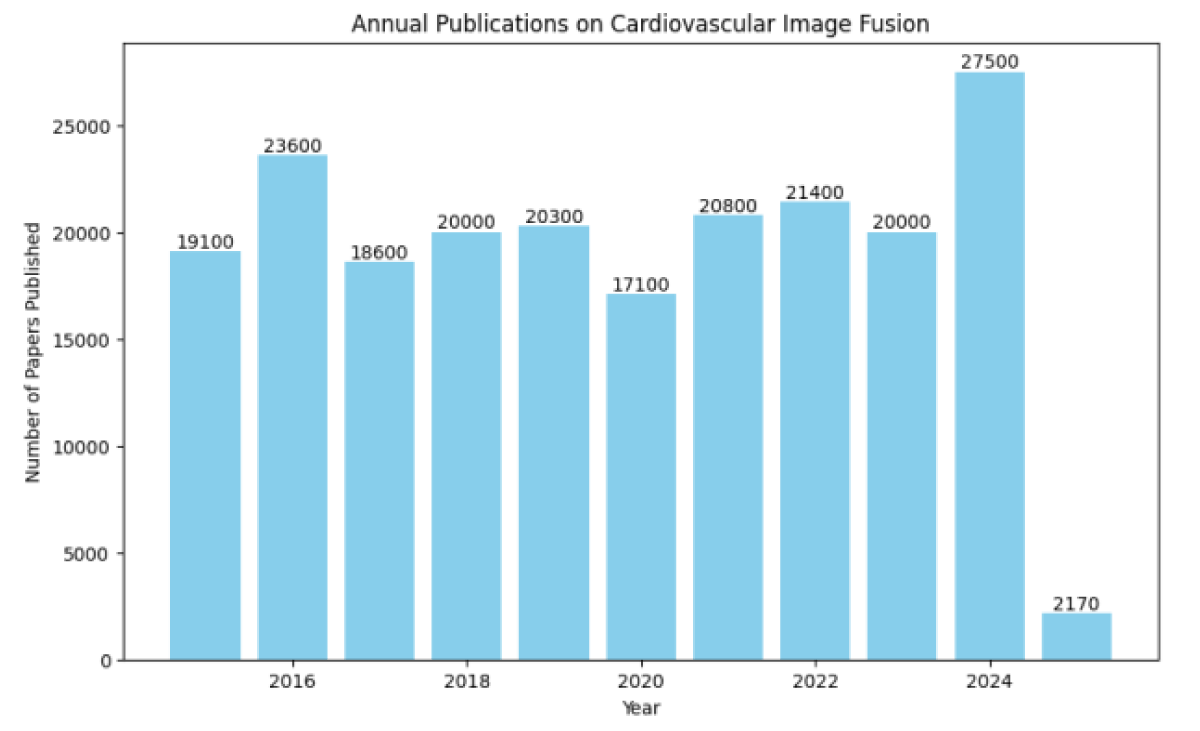

Figure 2 illustrates the trend in published research on cardiovascular image fusion between 2015 and 2025, with projected estimates for the last three years. Recent studies have also explored deep learning-driven fusion approaches for cardiovascular applications. CNN-based models extract both spatial and temporal features, enabling automatic learning of fusion rules without relying on predefined mathematical expressions. These approaches show great promise in improving diagnostic accuracy and reducing the reliance on manual feature selection [21].

Figure 2: Annual Publications on Cardiovascular Image Fusion (2015–2025*).

Computational methods for image fusion

Several computational techniques have been introduced to enhance fusion quality and efficiency. One widely used approach is wavelet transform, which enables multi-scale decomposition and ensures that fine details are preserved in the fused image. This method involves combining wavelet coefficients from source images using weighted fusion rules, followed by reconstruction using an inverse wavelet transform.

Principal Component Analysis (PCA) is another effective technique that reduces image dimensionality while retaining essential features. By computing the eigenvectors of the covariance matrix, PCA determines the most significant components, allowing for a more efficient fusion process [22].

With the advancement of deep learning, CNN-based fusion techniques have demonstrated remarkable improvements in extracting high-level features from multiple imaging modalities. Encoder-decoder architectures further refine spatial alignment and feature integration, leading to high-quality fusion results.

Challenges and future directions

Despite notable progress in image fusion, several challenges remain that hinder its widespread clinical adoption. One major issue is spatial misalignment, as differences in resolution and orientation between imaging modalities necessitate robust image registration techniques. Computational complexity is another concern, particularly with deep learning-based methods that require substantial processing power, limiting their use in real-time clinical applications. Additionally, the lack of standardized evaluation metrics continues to be a challenge. While metrics such as Structural Similarity Index (SSIM) and Visual Information Fidelity (VIF) are widely used, they still require further refinement to ensure consistency in medical imaging assessments.

Future research should focus on the development of real-time AI-driven fusion techniques, improved automated image registration, and the seamless integration of fusion methods into clinical decision-support systems. While traditional approaches like wavelet transform and PCA have been extensively applied, deep learning-based methods are emerging as the most promising alternatives. To ensure their effective integration into clinical workflows, further efforts should be directed toward improving computational efficiency and automation.

Theoretical foundation of image fusion

Image fusion is a technique used to integrate multiple images obtained from different modalities, producing a single, enhanced representation that improves diagnostic accuracy. In cardiovascular imaging, fusion techniques play a crucial role in combining structural, functional, and dynamic information from modalities such as CT angiography (CTA), PET, MRI, and ultrasound. This section presents the fundamental mathematical models and methodologies underlying image fusion, emphasizing their theoretical and practical aspects.

Mathematical formulation of image fusion

Mathematically, image fusion can be expressed as a function that combines two source images while retaining essential features from both. Let I1(x, y) and I2(x, y) represent two images obtained from different imaging modalities. The fused image If(x, y) is obtained using a fusion function F, which ensures optimal feature extraction while minimizing redundancy:

If(x, y) = F(I1(x, y), I2(x, y)) (2)

Eq. 2 describes the general form of the fusion process, where F represents a fusion rule that determines how information is merged. An ideal fusion method must satisfy three fundamental properties: structural preservation, feature enhancement, and noise minimization. Structural preservation ensures that essential edges, textures, and spatial details are retained, while feature enhancement focuses on highlighting relevant image features. Additionally, the fusion process should suppress noise and artifacts, ensuring that the final image is clear and informative.

Several mathematical approaches have been proposed for implementing F(I1, I2), including wavelet-based fusion, Principal Component Analysis (PCA), and deep learning models. The following sections discuss these techniques in detail.

Wavelet transform-based image fusion

The wavelet transform is widely used in image fusion due to its ability to analyze images at multiple scales while preserving both high-frequency (edges and fine details) and low-frequency (background) components. The Discrete Wavelet Transform (DWT) decomposes an image into sub-bands at different resolution levels, allowing selective feature extraction and fusion. Given an input image I(x, y), the wavelet decomposition results in four sub-band representations: approximate low-frequency coefficients $W_L$, horizontal detail coefficients $W_H$, vertical detail coefficients $W_V$, and diagonal detail coefficients $W_D$.

For two source images I1 and I2, their wavelet representations are given by:

$$\{W_L^{k}, W_H^{k}, W_V^{k}, W_D^{k}\} = \mathrm{DWT}(I_k), \quad k = 1, 2 \tag{3}$$

Eq. 3 represents the decomposition of the two input images. The fusion process then applies different fusion rules for the components. The low-frequency components are typically combined using a weighted averaging approach:

$$W_L^{f} = \alpha\, W_L^{1} + (1 - \alpha)\, W_L^{2} \tag{4}$$

while the high-frequency components are merged using a maximum selection rule:

$$W_d^{f} = \max\left(W_d^{1}, W_d^{2}\right), \quad d \in \{H, V, D\} \tag{5}$$

These fusion rules, expressed in Eqs. 4 and 5, ensure that essential structural and textural details are preserved. The parameter α controls the contribution of each image to the final fused result. Once the fusion process is complete, the final fused image is reconstructed by applying the Inverse Wavelet Transform (IWT):

$$I_f = \mathrm{IWT}\left(W_L^{f}, W_H^{f}, W_V^{f}, W_D^{f}\right) \tag{6}$$

which is given in Eq. 6. Wavelet-based fusion is particularly effective in medical imaging applications as it enhances contrast while minimizing noise, making it suitable for combining modalities such as CT and PET or MRI and ultrasound.
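The decompose, fuse, and reconstruct steps described in this subsection can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the implementation used in any cited study: it uses a hand-written single-level Haar transform (a library such as PyWavelets would normally be used), it requires even image dimensions, and it selects detail coefficients by larger absolute value, which is a common variant of the maximum selection rule. The helper names `haar_dwt2`, `haar_idwt2`, and `wavelet_fuse` are hypothetical.

```python
import numpy as np


def haar_dwt2(img):
    """Single-level 2D Haar transform; height and width must be even."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    low = (a + b + c + d) / 2.0    # approximation band
    horiz = (a + b - c - d) / 2.0  # detail across rows (band naming varies by convention)
    vert = (a - b + c - d) / 2.0   # detail across columns
    diag = (a - b - c + d) / 2.0   # diagonal detail
    return low, horiz, vert, diag


def haar_idwt2(low, horiz, vert, diag):
    """Exact inverse of haar_dwt2."""
    h, w = low.shape
    out = np.empty((2 * h, 2 * w))
    out[0::2, 0::2] = (low + horiz + vert + diag) / 2.0
    out[0::2, 1::2] = (low + horiz - vert - diag) / 2.0
    out[1::2, 0::2] = (low - horiz + vert - diag) / 2.0
    out[1::2, 1::2] = (low - horiz - vert + diag) / 2.0
    return out


def wavelet_fuse(img1, img2, alpha=0.5):
    """Weighted average on the low band, larger-magnitude selection on detail bands."""
    if img1.shape != img2.shape or img1.shape[0] % 2 or img1.shape[1] % 2:
        raise ValueError("inputs must share an even-sized shape")
    low1, *det1 = haar_dwt2(img1.astype(np.float64))
    low2, *det2 = haar_dwt2(img2.astype(np.float64))
    low = alpha * low1 + (1.0 - alpha) * low2
    details = [np.where(np.abs(x) >= np.abs(y), x, y) for x, y in zip(det1, det2)]
    return haar_idwt2(low, *details)
```

A quick sanity check is that fusing an image with itself returns the same image, since both fusion rules then leave every coefficient unchanged.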

Principal component analysis for image fusion

Principal Component Analysis (PCA) is a statistical method commonly used in image fusion for dimensionality reduction [22]. This technique transforms images into a new feature space and selects the most significant components for fusion. Suppose two source images I1 and I2 contain N pixels each. These images can be reshaped into column vectors $i_1$ and $i_2$ and combined into a data matrix X:

$$X = \begin{bmatrix} i_1 & i_2 \end{bmatrix}^{T} \in \mathbb{R}^{2 \times N} \tag{7}$$

as represented in Eq. 7. The covariance matrix of X is then computed as:

$$C = \frac{1}{N}\, X X^{T} \tag{8}$$

where each row of X is assumed to have had its mean intensity subtracted. Eq. 8 captures the variance of each image and the covariance between them. The eigenvectors U of C are computed by solving:

$$C U = \lambda U \tag{9}$$

where λ represents the eigenvalues associated with each eigenvector (Eq. 9). The fused image is then obtained by projecting the input images onto the principal components:

$$I_f = U^{T} X \tag{10}$$

which is given by Eq. 10. PCA-based fusion effectively enhances contrast and eliminates redundant information, making it a practical approach for combining CT and PET or MRI and ultrasound images.
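A compact NumPy sketch of PCA-based fusion is given below. It follows the covariance and eigenvector steps above, but for the final step it uses a widely used variant: the dominant eigenvector is converted into two normalized weights that blend the source images, rather than the literal projection of Eq. 10. The function name `pca_fuse` and the use of absolute values to resolve the eigenvector sign ambiguity are assumptions made for this illustration.

```python
import numpy as np


def pca_fuse(img1, img2):
    """Blend two registered images using weights from the dominant principal component."""
    X = np.stack([img1.ravel(), img2.ravel()]).astype(np.float64)  # shape (2, N)
    Xc = X - X.mean(axis=1, keepdims=True)                         # mean-center each image
    C = Xc @ Xc.T / X.shape[1]                                     # 2x2 covariance matrix
    eigvals, U = np.linalg.eigh(C)                                 # eigenvalues in ascending order
    u = np.abs(U[:, -1])                                           # dominant eigenvector, sign removed
    weights = u / u.sum()                                          # normalize so weights sum to 1
    fused = weights[0] * img1 + weights[1] * img2
    return fused, weights


# Toy example: the first image has much larger variance, so it dominates.
img_a = np.array([[0.0, 10.0], [20.0, 30.0]])
img_b = np.array([[0.0, 1.0], [2.0, 3.0]])
fused, weights = pca_fuse(img_a, img_b)
```

Because the weights follow the variance of each source, a modality with a wider intensity range will dominate unless the inputs are normalized first, which is worth keeping in mind when fusing modalities with very different scales.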

Deep learning-based image fusion

Recent advances in deep learning have enabled data-driven fusion techniques that automatically learn feature representations from multi-modal images. Unlike traditional methods that rely on predefined mathematical rules, Convolutional Neural Networks (CNNs) extract essential features and optimize fusion processes.

In a typical CNN-based fusion model, the input images I1 and I2 are processed through convolutional layers to extract feature maps:

F1 = CNN(I1), F2 = CNN(I2) (11)

as shown in Eq. 11. The extracted features are then combined using a fusion function G:

Ff = G(F1,F2) (12)

where G can take various forms, such as max selection, weighted summation, or attention-based fusion (Eq. 12). The fused feature map is then passed through a decoder network to reconstruct the final fused image:

$$I_f = \mathrm{CNN}^{-1}(F_f) \tag{13}$$

Eq. 13 represents the reconstruction process. Deep learning fusion methods automatically optimize fusion strategies, enhancing texture, contrast, and spatial details. This makes them particularly effective for MRI-ultrasound fusion and complex cardiovascular imaging applications.
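To make the role of the fusion function G in Eq. 12 more concrete, the sketch below implements three simple candidates on feature maps of shape (channels, height, width). It is only an illustration: the feature maps are plain arrays rather than outputs of a trained network, and the "attention" rule is a hand-written softmax over per-pixel activity, standing in for the learned attention modules used in practice. All function names are hypothetical.

```python
import numpy as np


def fuse_max(f1, f2):
    """Element-wise maximum of two feature maps."""
    return np.maximum(f1, f2)


def fuse_weighted(f1, f2, w=0.5):
    """Fixed weighted sum of two feature maps."""
    return w * f1 + (1.0 - w) * f2


def fuse_attention(f1, f2):
    """Softmax weighting by per-pixel activity (L1 norm across channels)."""
    a1 = np.abs(f1).sum(axis=0)   # activity map of shape (H, W)
    a2 = np.abs(f2).sum(axis=0)
    m = np.maximum(a1, a2)        # subtract the max for numerical stability
    e1, e2 = np.exp(a1 - m), np.exp(a2 - m)
    w1 = e1 / (e1 + e2)           # weight of f1 at each pixel
    return w1 * f1 + (1.0 - w1) * f2
```

In a full model, the output of G would be passed to the decoder of Eq. 13; here the functions can be compared directly on the same pair of feature maps to see how each rule distributes influence between the two sources.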

Performance evaluation of fusion methods

To assess the effectiveness of different fusion techniques, several quantitative metrics are commonly used. The Structural Similarity Index (SSIM) measures structural preservation and is defined in Eq. 14:

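$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x \mu_y + C_1)(2\sigma_{xy} + C_2)}{(\mu_x^2 + \mu_y^2 + C_1)(\sigma_x^2 + \sigma_y^2 + C_2)} \tag{14}$$

where $\mu_x$ and $\mu_y$ are the mean intensities of images x and y, $\sigma_x^2$ and $\sigma_y^2$ their variances, $\sigma_{xy}$ their covariance, and $C_1$, $C_2$ small constants that stabilize the division.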

Another key metric is the Peak Signal-to-Noise Ratio (PSNR), given by:

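$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right) \tag{15}$$

where MAX is the maximum possible pixel value and MSE is the mean squared error between the reference and fused images.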

Eq. 15 evaluates noise suppression and image clarity. Additionally, entropy is used to quantify the amount of retained information in Eq. 16:

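$$H = -\sum_{i} p_i \log_2 p_i \tag{16}$$

where $p_i$ is the probability of gray level i in the fused image.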

Higher entropy values indicate improved information retention in the fused image.

By applying these performance metrics, different fusion methods can be systematically compared to determine their effectiveness in medical imaging applications.
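The three metrics can be computed with a few lines of NumPy, as in the sketch below. Two simplifications are assumed: SSIM is evaluated once over the whole image (`global_ssim`) instead of over local sliding windows as in common library implementations, and the constants use the conventional choices K1 = 0.01 and K2 = 0.03. Images are assumed to be 8-bit, so the default maximum value is 255.

```python
import numpy as np


def psnr(ref, img, max_val=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = np.mean((ref.astype(np.float64) - img.astype(np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))


def entropy(img, levels=256):
    """Shannon entropy (bits) of the gray-level histogram."""
    hist, _ = np.histogram(img, bins=levels, range=(0, levels))
    p = hist[hist > 0] / hist.sum()
    return float(-np.sum(p * np.log2(p)))


def global_ssim(x, y, max_val=255.0):
    """SSIM computed over the whole image as a single window (simplified)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (0.01 * max_val) ** 2
    c2 = (0.03 * max_val) ** 2
    mx, my = x.mean(), y.mean()
    cov = np.mean((x - mx) * (y - my))
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2)
    return float(num / den)
```

For fusion there is usually no single ground-truth reference, so PSNR and SSIM are typically reported against each source image separately, while entropy is computed on the fused image alone.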

Different image fusion techniques have their own advantages and limitations. Wavelet transform preserves both high and low-frequency details, making it effective for structural preservation, though it is computationally expensive. PCA fusion reduces redundancy by focusing on dominant features, but it may lose fine details. Deep learning-based methods adaptively learn fusion rules and enhance image quality but require large datasets and computational resources. A comparative analysis of these methods is summarized in Table 1.

Table 1: Comparative Analysis of Image Fusion Techniques.

| Fusion Method | Advantages | Limitations |
| --- | --- | --- |
| Wavelet Transform | Effectively preserves structural details and frequency components, making it suitable for medical imaging applications. | Computationally expensive and may introduce artifacts at sharp transitions. |
| Principal Component Analysis (PCA) | Reduces data redundancy and enhances contrast by extracting dominant features. | May lose fine details, especially in complex multi-modal images. |
| Deep Learning-based Fusion | Automatically learns fusion rules, enhancing texture and feature representation. Works well for complex medical images. | Requires a large dataset for training and significant computational resources. |

Applications in cardiovascular imaging

Cardiovascular imaging is essential for diagnosing and monitoring heart-related diseases. Since no single imaging modality can fully capture the structure, function, and dynamics of the heart, image fusion techniques are employed to integrate complementary information. This leads to enhanced visualization and improved diagnostic accuracy. Key applications of image fusion in cardiovascular imaging include coronary artery visualization, cardiac function assessment, and hemodynamic flow analysis.

Visualization of coronary arteries

Coronary Artery Disease (CAD) is a major cause of morbidity and mortality, requiring early detection of arterial narrowing and reduced blood supply. Each imaging technique has limitations: Computed Tomography Angiography (CTA) provides high-resolution images but lacks functional data, while Positron Emission Tomography (PET) highlights ischemic regions but lacks anatomical precision [23,24]. A promising approach is the fusion of CTA and PET images, which combines the anatomical details of CTA with the metabolic insights from PET.

Let ICT (x, y) and IPET (x, y) represent the CTA and PET images, respectively. The fused image Ifused is obtained using a weighted fusion approach shown in Eq. 17:

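$$I_{\text{fused}}(x, y) = \alpha\, I_{\text{CT}}(x, y) + (1 - \alpha)\, I_{\text{PET}}(x, y) \tag{17}$$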

where α is a weighting factor optimized for clinical relevance. CTA contributes high-resolution anatomical details, while PET adds functional information regarding myocardial perfusion.
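The weighted fusion of Eq. 17 can be sketched as follows. Because CT intensities (Hounsfield units) and PET uptake values live on very different scales, this sketch first rescales each image to [0, 1]; that normalization step, the default α = 0.6, and the function names are assumptions made for illustration and are not prescribed by the text. The inputs are assumed to be already registered to the same grid.

```python
import numpy as np


def normalize(img):
    """Min-max rescale to [0, 1]; a constant image maps to all zeros."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros_like(img)
    return (img - lo) / (hi - lo)


def weighted_fusion(ct, pet, alpha=0.6):
    """alpha * CT + (1 - alpha) * PET on normalized, registered images."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if ct.shape != pet.shape:
        raise ValueError("images must be registered to the same grid")
    return alpha * normalize(ct) + (1.0 - alpha) * normalize(pet)


# Toy example with made-up intensities.
ct = np.array([[0.0, 100.0], [200.0, 400.0]])
pet = np.array([[4.0, 0.0], [2.0, 2.0]])
fused = weighted_fusion(ct, pet, alpha=0.5)
```

Raising α toward 1 emphasizes anatomical detail from CTA, while lowering it emphasizes the perfusion signal from PET; in practice the value would need to be tuned and validated for the clinical task.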

By integrating these modalities, clinicians can simultaneously assess arterial plaques and ischemic regions, enabling better risk stratification and informed decision-making regarding interventions such as stenting or bypass surgery.

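For PCA-based fusion of MRI and ultrasound (discussed under cardiac function assessment), the covariance matrix C of the MRI image $I_{\text{MRI}}$ and the ultrasound image $I_{\text{US}}$ is computed to capture the correlation between the modalities. Next, the eigenvectors U of C are extracted, and both images are projected onto these principal components using the transformation in Eq. 18:

$$P = U^{T} \begin{bmatrix} I_{\text{MRI}} \\ I_{\text{US}} \end{bmatrix} \tag{18}$$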

Cardiac function assessment

Evaluating cardiac function is crucial for diagnosing heart failure, cardiomyopathies, and valvular diseases. Magnetic Resonance Imaging (MRI) provides high-resolution anatomical data but lacks real-time imaging capabilities. Conversely, ultrasound (echocardiography) offers real-time assessment of cardiac motion but is limited by depth resolution. Combining MRI and Doppler ultrasound enables simultaneous visualization of myocardial structure and blood flow dynamics [25].

The fusion process employs Principal Component Analysis (PCA) to extract essential features from both MRI and ultrasound images. The covariance matrix C of the MRI image IMRI and ultrasound image IUS is computed as:

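$$C = \frac{1}{N} \sum_{i=1}^{N} (x_i - \bar{x})(x_i - \bar{x})^{T}, \quad x_i = \begin{bmatrix} I_{\text{MRI}}(i) \\ I_{\text{US}}(i) \end{bmatrix}, \quad \bar{x} = \begin{bmatrix} \bar{I}_{\text{MRI}} \\ \bar{I}_{\text{US}} \end{bmatrix} \tag{19}$$

where $\bar{I}_{\text{MRI}}$ and $\bar{I}_{\text{US}}$ represent the mean intensities of the respective images and N is the number of pixels. The eigenvectors U of C are used to transform the images into principal components, leading to the fused image:

$$I_{\text{fused}} = U^{T} \begin{bmatrix} I_{\text{MRI}} \\ I_{\text{US}} \end{bmatrix} \tag{20}$$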

This fused representation enhances visualization of myocardial contraction, ventricular wall motion, and blood flow, improving diagnostic accuracy.

Hemodynamic flow analysis

Analyzing blood flow in the heart is critical for detecting conditions such as aortic stenosis and mitral valve regurgitation. Doppler ultrasound captures real-time flow but lacks depth resolution, while Phase-Contrast MRI (PC-MRI) provides velocity maps but is time-consuming [26]. By fusing Doppler ultrasound with PC-MRI, a comprehensive velocity field can be obtained.

Let VUS (x, y, t) and VMRI (x, y, t) denote the velocity fields from Doppler ultrasound and PC-MRI, respectively. The fused velocity field is given by Eq. 21:

(21)

This fusion enhances the detection of abnormal blood flow patterns, assisting in diagnosing valvular diseases and predicting stroke risk by identifying turbulent flow regions.

Image fusion also plays a vital role in interventional cardiology. For example, integrating Computed Tomography (CT) with ultrasound aids in precise valve placement during Transcatheter Aortic Valve Replacement (TAVR). Similarly, combining fluoroscopy with MRI assists in catheter-based ablation procedures, ensuring precise navigation of cardiac structures. Table 2 summarizes key applications of image fusion in cardiovascular imaging.

Table 2: Applications of Image Fusion in Cardiovascular Imaging.

| Application | Modalities Used | Key Benefits |
| --- | --- | --- |
| Coronary Artery Visualization | CT + PET | Combines anatomical and functional data |
| Cardiac Function Assessment | MRI + Ultrasound | Enhances real-time motion visualization |
| Hemodynamic Flow Analysis | Doppler + PC-MRI | Provides comprehensive blood flow mapping |
| Guided Cardiac Interventions | Fluoroscopy + MRI | Improves catheter navigation accuracy |

Future directions in cardiovascular image fusion

Despite significant advancements, challenges in cardiovascular image fusion remain, such as computational demands, accurate image registration, and the integration of Artificial Intelligence (AI) for real-time processing. Future research should focus on AI-assisted fusion models, hybrid imaging devices, and personalized diagnostics tailored to patient-specific anatomy and physiology. These developments aim to enhance real-time imaging, improve diagnostic precision, and optimize treatment planning in cardiology.

Image fusion continues to improve cardiovascular diagnostics by integrating anatomical, functional, and hemodynamic data. Further advancements in AI-driven fusion and automated image registration will contribute to more efficient and patient-specific cardiac care. The main challenges and corresponding research directions are summarized in Table 3.

Table 3: Summary of Challenges and Future Research Directions.

| Challenge | Current Limitations | Future Research Directions |
| --- | --- | --- |
| Image Registration | Misalignment between modalities | AI-driven automatic registration |
| Noise and Resolution Differences | PET and ultrasound suffer from low SNR | AI-based super-resolution models |
| Computational Complexity | Deep learning methods require high computational power | Edge computing & Quantum AI |
| Evaluation Metrics | Lack of clinically relevant metrics | Task-specific fusion evaluation |
| Clinical Implementation | Fusion not integrated into hospital workflows | PACS-integrated real-time fusion |

Challenges in cardiovascular image fusion

Cardiovascular image fusion has revolutionized how medical professionals diagnose and treat heart conditions. By combining information from different imaging modalities, such as CT, MRI, PET, and ultrasound, clinicians gain a more comprehensive view of the cardiovascular system. However, despite these advancements, several challenges remain, preventing widespread clinical adoption. These challenges span technical, computational, and clinical aspects, requiring innovative solutions to improve accuracy, efficiency, and usability. This section explores some of the key obstacles in cardiovascular image fusion and potential ways to address them.

Image registration and spatial misalignment

One of the biggest challenges in cardiovascular image fusion is aligning images accurately. Different imaging techniques capture the heart from various angles and at different resolutions, making it difficult to achieve perfect alignment. For example, CT and MRI produce detailed static images, while ultrasound and PET are more prone to motion-related distortions. If images are misaligned, the resulting fusion may be blurry or misleading, reducing its reliability in clinical settings [27].

To tackle this issue, researchers have developed methods such as feature-based registration, which aligns images based on anatomical landmarks, and mutual information maximization, which ensures that shared content between images is properly matched. AI-driven deep learning models are also emerging as powerful tools for automatic image alignment. Future advancements will likely focus on real-time AI registration techniques that require minimal human intervention and hybrid approaches combining traditional and deep-learning-based methods for improved precision.
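Mutual information maximization can be illustrated with a small NumPy sketch. It estimates mutual information from a joint intensity histogram and then searches exhaustively over integer translations for the shift that maximizes it. This is a deliberately simplified setting: real cardiac registration needs rigid or deformable transforms, sub-pixel interpolation, and a proper optimizer. The use of `np.roll` (which wraps around at the borders), the bin count, and the function names are assumptions made for illustration.

```python
import numpy as np


def mutual_information(a, b, bins=16):
    """Mutual information (in nats) estimated from a joint intensity histogram."""
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of a, shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)   # marginal of b, shape (1, bins)
    nz = pxy > 0                          # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px @ py)[nz])))


def register_translation(fixed, moving, max_shift=3):
    """Exhaustive search for the integer (dy, dx) shift of `moving` that maximizes MI."""
    best_shift, best_mi = (0, 0), -np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            shifted = np.roll(moving, (dy, dx), axis=(0, 1))  # wrap-around shift
            mi = mutual_information(fixed, shifted)
            if mi > best_mi:
                best_shift, best_mi = (dy, dx), mi
    return best_shift, best_mi


# Synthetic test: the "moving" image is a shifted, intensity-inverted copy,
# mimicking a second modality with different contrast.
rng = np.random.default_rng(0)
fixed = rng.random((32, 32))
moving = 1.0 - np.roll(fixed, (-2, 1), axis=(0, 1))
shift, mi = register_translation(fixed, moving)
```

Because mutual information depends only on the statistical relationship between intensities, it can recover the alignment even though the second image has inverted contrast, which is why it is popular for multi-modal registration.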

Variability in image quality and noise

Another challenge in image fusion is the significant difference in quality between imaging modalities. While CT and MRI provide high-resolution images, PET and ultrasound often suffer from lower Signal-to-Noise Ratios (SNR), making it difficult to merge images seamlessly. Differences in contrast, brightness, and resolution can lead to suboptimal fusion results [28].

Current solutions involve multi-scale wavelet-based denoising, which reduces noise while preserving important image details, and histogram equalization, which normalizes contrast levels across modalities. Deep learning-based super-resolution techniques are also being used to enhance low-quality images before fusion. Moving forward, researchers aim to develop adaptive noise reduction methods that preserve clinically relevant details and AI-powered super-resolution techniques tailored for PET and ultrasound images.
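As one example of the contrast normalization mentioned above, global histogram equalization can be written in a few lines of NumPy. The sketch assumes an integer-valued image with gray levels in [0, levels - 1] (for example, 8-bit data); clinical pipelines often use adaptive variants instead, which are not shown here. The function name `equalize_histogram` is illustrative.

```python
import numpy as np


def equalize_histogram(img, levels=256):
    """Global histogram equalization for an integer image with values in [0, levels - 1]."""
    hist = np.bincount(img.ravel(), minlength=levels)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]            # CDF value at the darkest gray level present
    denom = cdf[-1] - cdf_min
    if denom == 0:                       # constant image: nothing to equalize
        return img.copy()
    # Lookup table mapping each gray level to its equalized value.
    lut = np.round((cdf - cdf_min) / denom * (levels - 1))
    lut = lut.clip(0, levels - 1).astype(img.dtype)
    return lut[img]


# Toy low-contrast image occupying only part of the 8-bit range.
low_contrast = np.array([[50, 50], [100, 150]], dtype=np.uint8)
equalized = equalize_histogram(low_contrast)
```

After equalization the toy image spans the full 0 to 255 range while preserving the ordering of gray levels, which makes intensity ranges more comparable across modalities before fusion.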

Computational complexity and processing time

Cardiovascular image fusion, particularly when deep learning techniques are involved, requires substantial computational power. Real-time fusion is essential for interventional procedures, yet current methods often experience delays due to processing constraints [29,30]. These delays can limit the practical application of fusion technology in critical medical situations.

To address this, researchers have turned to parallel processing and GPU acceleration to speed up computations. Compressed sensing techniques are also being explored to reduce computational load without compromising quality. Future developments may include edge computing solutions that enable real-time fusion on portable ultrasound and MRI machines, as well as the potential use of quantum computing for medical image processing.

Lack of standardized evaluation metrics

Assessing the quality of fused images is not always straightforward, as commonly used metrics like PSNR (Peak Signal-to-Noise Ratio) and SSIM (Structural Similarity Index) do not necessarily reflect clinical usefulness. Different radiologists may have varying preferences for what constitutes a good fusion result, making standardization difficult.

Some current approaches include task-based evaluation metrics, which assess the effectiveness of fused images in improving diagnostic accuracy, and machine learning classifiers trained on expert-annotated data to automate fusion quality assessments [31]. Future research will likely focus on developing clinically driven evaluation metrics that prioritize diagnostic performance over pixel-level similarity and incorporating radiologist feedback into AI-driven quality assessment models.

Real-time implementation in clinical workflows

Most existing fusion techniques are designed for offline processing, which limits their use in emergency situations where rapid decision-making is crucial. Integrating image fusion into clinical workflows, such as PACS (Picture Archiving and Communication Systems), remains a challenge [32].

Current solutions include cloud-based fusion systems that allow remote access and real-time collaboration among healthcare professionals. AI-driven decision support systems are also being developed to automatically interpret fused images and assist clinicians. The future of real-time fusion lies in edge-based algorithms that can be embedded directly into imaging devices, enabling instant fusion without the need for extensive post-processing. Additionally, standardizing AI-driven fusion software across different hospitals and imaging platforms will be key to widespread adoption.

Future directions in cardiovascular image fusion

To push the field forward, researchers must focus on automation, clinical validation, and AI-driven optimization. Below are some key areas of future development:

- AI and deep learning for fully automated image fusion: Many current fusion methods require manual fine-tuning. The future goal is to develop self-learning AI models that can automatically determine the best fusion approach for each patient, adapting to different imaging conditions without human intervention.

- Multi-modal fusion for personalized cardiac diagnosis: Existing fusion techniques are often one-size-fits-all, but patient-specific variations in anatomy and physiology must be accounted for. Future advancements will involve adaptive AI models trained on personalized data, with fusion strategies customized based on age, gender, and medical history.

- Real-time image fusion for interventional cardiology: Real-time feedback during procedures is critical but currently limited by processing delays. The future lies in low-latency fusion techniques enabled by edge computing and lightweight neural networks optimized for speed.

- Hybrid imaging modalities for direct multi-modal fusion: Today’s fusion methods rely on separate image acquisitions, which require manual alignment. A major step forward would be the development of dual-modality imaging systems, such as combined MRI + ultrasound scanners, which would capture multi-modal data simultaneously and eliminate the need for post-processing alignment.

- Explainable AI for trustworthy image fusion in healthcare: Many AI-based fusion models function as black boxes, making it difficult for clinicians to trust their decisions. Future AI systems must incorporate explainability features, such as attention-based models that highlight key regions of interest and clinician-in-the-loop frameworks that provide transparent insights alongside fused images.

Table 3 provides a summary of key challenges in multimodality cardiac imaging and outlines potential future research directions to address these limitations.

While cardiovascular image fusion holds great promise for improving diagnostics and treatment planning, several technical and clinical challenges must be overcome. Future innovations in AI, real-time processing, hybrid imaging, and explainable AI will be crucial in making fusion a standard practice in cardiology. Addressing these challenges will move cardiovascular imaging toward fusion-based diagnostics that are fully automated, highly personalized, and seamlessly integrated into clinical workflows, ultimately improving patient care and outcomes.

Conclusion

Cardiovascular Diseases (CVDs) remain a leading cause of mortality worldwide, highlighting the need for improved diagnostic imaging. This paper explored various image fusion techniques that combine complementary modalities like CT, MRI, PET, and ultrasound to enhance visualization, accuracy, and clinical decision-making. By integrating structural and functional imaging, fusion techniques provide a more comprehensive assessment of cardiovascular conditions.

We examined key fusion methodologies, including wavelet transform-based fusion for preserving spatial and frequency details, PCA for dimensionality reduction, and deep learning approaches such as CNNs for automated feature extraction. Applications like CT-PET fusion for detecting arterial blockages, MRI-ultrasound fusion for real-time cardiac function analysis, and Doppler ultrasound-PC MRI fusion for assessing blood flow patterns were discussed, demonstrating the clinical potential of these techniques.
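For concreteness, the two classical approaches summarized above can be sketched in a few lines of NumPy. The example assumes two co-registered grayscale images of equal size with even height and width. It uses a single-level Haar wavelet with an averaging rule for the approximation band and a maximum-absolute-value rule for the detail bands, and a PCA variant that weights each image by its normalized principal-component loading. These rules, the function names, and the absolute-value step applied to the eigenvector are illustrative assumptions rather than the specific formulations surveyed in this paper.

```python
import numpy as np


def haar_dwt2(x):
    """One-level 2-D Haar transform; x must have even height and width."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    approx = (a + b + c + d) / 2.0
    d_col = (a - b + c - d) / 2.0   # differences across columns
    d_row = (a + b - c - d) / 2.0   # differences across rows
    d_diag = (a - b - c + d) / 2.0  # diagonal differences
    return approx, (d_col, d_row, d_diag)


def haar_idwt2(approx, details):
    """Exact inverse of haar_dwt2."""
    d_col, d_row, d_diag = details
    x = np.empty((2 * approx.shape[0], 2 * approx.shape[1]), dtype=float)
    x[0::2, 0::2] = (approx + d_col + d_row + d_diag) / 2.0
    x[0::2, 1::2] = (approx - d_col + d_row - d_diag) / 2.0
    x[1::2, 0::2] = (approx + d_col - d_row - d_diag) / 2.0
    x[1::2, 1::2] = (approx - d_col - d_row + d_diag) / 2.0
    return x


def wavelet_fuse(img_a, img_b):
    """Average the approximation bands; keep the larger-magnitude detail coefficient."""
    approx_a, det_a = haar_dwt2(np.asarray(img_a, dtype=float))
    approx_b, det_b = haar_dwt2(np.asarray(img_b, dtype=float))
    approx = (approx_a + approx_b) / 2.0
    details = tuple(
        np.where(np.abs(da) >= np.abs(db), da, db)
        for da, db in zip(det_a, det_b)
    )
    return haar_idwt2(approx, details)


def pca_weights(img_a, img_b):
    """Fusion weights from the principal eigenvector of the 2x2 pixel covariance."""
    data = np.stack([np.ravel(img_a), np.ravel(img_b)]).astype(float)
    eigvals, eigvecs = np.linalg.eigh(np.cov(data))
    # Assumption: take absolute loadings so the weights are non-negative.
    v = np.abs(eigvecs[:, np.argmax(eigvals)])
    return v / v.sum()


def pca_fuse(img_a, img_b):
    """Weighted sum of the two images using the PCA-derived weights."""
    w = pca_weights(img_a, img_b)
    return w[0] * np.asarray(img_a, dtype=float) + w[1] * np.asarray(img_b, dtype=float)
```

In this sketch the wavelet rule favors whichever image has the stronger local edge response, while the PCA rule applies one global weight per image, which is one way to see why the two families behave differently on fine anatomical detail.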

Despite these advancements, challenges remain. Computational complexity, misalignment between modalities, and the need for manual parameter tuning limit widespread adoption. Real-time AI-driven fusion models and dual-modality scanners could help address these barriers, improving efficiency and accuracy. Additionally, making deep learning models more transparent will enhance clinical trust and usability.

Image fusion represents a transformative shift in cardiovascular imaging, enabling precise, non-invasive diagnostics and improving treatment planning. As technology advances, AI-driven real-time fusion and hybrid imaging systems will further revolutionize cardiovascular care, ultimately enhancing patient outcomes and reducing the global burden of CVDs.
